- Category: Medicine

- Date: 2025-11-06

- Last modified: 2025-11-06

- Writer: Research Planning and Management Division (연구기획관리과), 032-835-9322~5

PRESS RELEASE

Study by Incheon National University Could Transform Skin Cancer Detection with Near-Perfect Accuracy

New deep learning system integrates images and clinical details, improving early skin cancer diagnosis and aiding in smart healthcare

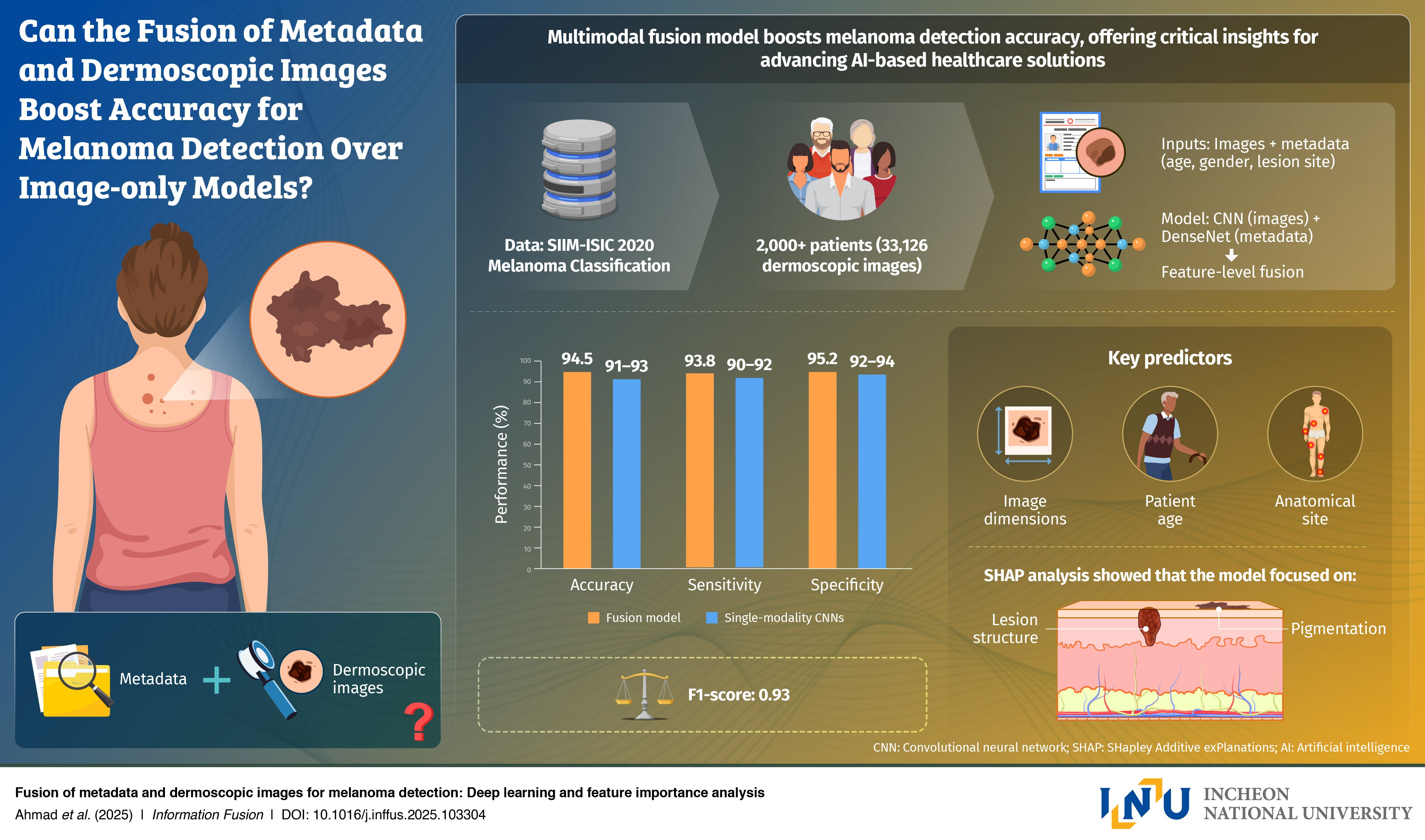

Melanoma is the deadliest form of skin cancer, responsible for thousands of deaths each year, but early detection can dramatically increase survival rates. Now, scientists have developed an advanced artificial intelligence (AI) model that detects melanoma more accurately by combining skin images with patient metadata. The model achieved 94.5% accuracy, a breakthrough in AI-powered early detection of melanoma that advances smart healthcare systems.

Image title: Artificial intelligence sees what doctors can’t: Combining patient data and images to detect melanoma

Image caption: A new deep learning system developed by an international research team detects melanoma with 94.5% accuracy by fusing dermoscopic images and patient metadata such as age, gender, and lesion location. The approach enhances diagnostic precision, transparency, and access to early skin cancer detection through smart healthcare technology.

Image credit: Professor Gwanggil Jeon from Incheon National University, Korea

License type: Original content

Usage restrictions: Cannot be reused without permission.

Melanoma remains one of the hardest skin cancers to diagnose because it often mimics harmless moles or lesions. Most artificial intelligence (AI) tools rely on dermoscopic images alone, overlooking crucial patient information (such as age, gender, or where on the body the lesion appears) that can improve diagnostic accuracy. This gap highlights the importance of multimodal fusion models, which combine images with such clinical data to enable high-precision diagnosis.

To bridge that gap, Professor Gwanggil Jeon from the Department of Embedded Systems Engineering, Incheon National University, South Korea, in collaboration with the University of the West of England (UK), Anglia Ruskin University (UK), and the Royal Military College of Canada, created a deep learning model that integrates patient data and dermoscopic images. The study was made available online on June 6, 2025, and will be published in Volume 124 of the journal Information Fusion on December 1, 2025.
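The release does not describe the network architecture, so what follows is only a minimal sketch of one common way to combine the two inputs, late fusion by concatenation, and not the authors' method. It assumes an image embedding has already been produced by some CNN backbone and turns the metadata (age, sex, lesion site) into numbers. The category vocabularies, the age-scaling constant, and every function name here are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical vocabularies for the categorical metadata (assumption: values of
# the kind found in SIIM-ISIC metadata, not an exact schema).
SEXES: List[str] = ["female", "male"]
SITES: List[str] = [
    "head/neck", "upper extremity", "lower extremity",
    "torso", "palms/soles", "oral/genital",
]


@dataclass
class PatientMetadata:
    age: float  # years
    sex: str
    site: str   # anatomical site of the lesion


def one_hot(value: str, vocabulary: List[str]) -> List[float]:
    """One-hot encode a category; a value outside the vocabulary maps to all zeros."""
    return [1.0 if value == v else 0.0 for v in vocabulary]


def encode_metadata(meta: PatientMetadata, max_age: float = 90.0) -> List[float]:
    """Age scaled by a hypothetical max_age, followed by one-hot sex and one-hot site."""
    return [meta.age / max_age] + one_hot(meta.sex, SEXES) + one_hot(meta.site, SITES)


def fuse(image_embedding: List[float], meta: PatientMetadata) -> List[float]:
    """Late fusion by concatenation; in a real model this vector would feed a classifier head."""
    return list(image_embedding) + encode_metadata(meta)


if __name__ == "__main__":
    # Made-up stand-in for a CNN feature vector (real backbones output far longer vectors).
    embedding = [0.12, -0.40, 0.88, 0.05]
    meta = PatientMetadata(age=45, sex="female", site="torso")
    fused = fuse(embedding, meta)
    print(len(fused))  # 4 image + 1 age + 2 sex + 6 site = 13
```

Concatenation is the simplest fusion strategy; attention-based or gated fusion are common alternatives, and the release does not say which approach the study used.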

“Skin cancer, particularly melanoma, is a disease in which early detection is critically important for determining survival rates,” says Prof. Jeon. “Since melanoma is difficult to diagnose based solely on visual features, I recognized the need for AI convergence technologies that can consider both imaging data and patient information.”

Using the large-scale SIIM-ISIC melanoma dataset, which contains over 33,000 dermoscopic images paired with clinical metadata, the team trained their AI model to recognize subtle links between what appears on the skin and who the patient is. The model achieved 94.5% accuracy and an F1-score of 0.94, outperforming popular image-only models such as ResNet-50 and EfficientNet.
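For readers less familiar with the two metrics reported above, here is a short sketch of how accuracy and F1-score are computed for a binary melanoma-versus-benign task. The release does not state whether the reported F1 of 0.94 refers to the melanoma class alone or is averaged across classes; this sketch uses the positive-class (binary) form, and the labels are toy values, not the study's data.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int],
                     positive: int = 1) -> Tuple[int, int, int, int]:
    """Return (true positives, false positives, false negatives, true negatives)."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Harmonic mean of precision and recall for the positive (melanoma) class."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Toy labels (1 = melanoma, 0 = benign); not data from the study.
    y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
    print(accuracy(y_true, y_pred))  # 0.8
    print(f1_score(y_true, y_pred))  # 0.75

    # An "always benign" classifier on a skewed set: high accuracy, zero F1.
    skewed = [1] + [0] * 19
    print(accuracy(skewed, [0] * 20), f1_score(skewed, [0] * 20))  # 0.95 0.0
```

The last example shows why F1 is usually reported alongside accuracy: when benign lesions dominate a dataset, a model can score high accuracy while missing every melanoma.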

The researchers also performed a feature importance analysis to make the system more transparent and robust. Factors such as lesion size, patient age, and anatomical site were found to contribute strongly to accurate detection. These insights can help doctors understand how the AI reaches its diagnosis and give them a basis for deciding when to trust it.
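The release does not name the feature-importance technique the authors used. As an illustration only, here is a minimal sketch of permutation importance, a common model-agnostic method: shuffle one feature's values across samples and measure how much accuracy drops. The rule-based `toy_model`, its thresholds, the feature names, and the data are all hypothetical, chosen just to make the mechanics visible.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: List[Row], y: List[int]) -> float:
    """Fraction of rows the model labels correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[Row], y: List[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean accuracy drop when each feature column is shuffled on its own."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Replace only column j; every other feature keeps its original value.
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row: Row) -> int:
    """Hypothetical rule: flag lesions larger than 6 mm in patients over 50."""
    size_mm, age, _noise = row
    return int(size_mm > 6 and age > 50)


if __name__ == "__main__":
    FEATURES = ["lesion_size_mm", "age", "noise"]
    X = [
        [8, 60, 0.3], [9, 72, 0.9], [7, 55, 0.1], [8, 30, 0.5],
        [3, 65, 0.7], [2, 40, 0.2], [4, 58, 0.8], [9, 25, 0.4],
    ]
    y = [toy_model(row) for row in X]  # labels follow the rule, so baseline accuracy is 1.0
    for name, score in zip(FEATURES, permutation_importance(toy_model, X, y)):
        print(f"{name}: {score:.3f}")
```

Because the toy model never reads `noise`, its importance comes out at exactly 0.0, while lesion size and age both receive positive scores. A real analysis would score a trained model on held-out data, and the study may have used a different technique altogether.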

Prof. Jeon says, “The model is not merely designed for academic purposes. It could be used as a practical tool that could transform real-world melanoma screening. This research can be directly applied to developing an AI system that analyzes both skin lesion images and basic patient information to enable early detection of melanoma.”

In the future, the model could power smartphone-based skin diagnosis applications, telemedicine systems, or AI-assisted tools in dermatology clinics, helping reduce misdiagnosis rates and improve access to care. Prof. Jeon explains, “The study represents a step forward toward personalized diagnosis and preventive medicine through AI convergence technology.”

The study highlights how multimodal AI can bridge the gap between machine learning and clinical decision-making, paving the way for more accurate, accessible, and trustworthy skin cancer diagnostics.

Reference

Authors: Misbah Ahmad (a, b), Imran Ahmed (c), Abdellah Chehri (d), Gwanggil Jeon (e)*

Title of original paper: Fusion of metadata and dermoscopic images for melanoma detection: Deep learning and feature importance analysis

Journal: Information Fusion

DOI:

Affiliations:

- (a) Centre for Machine Vision, Bristol Robotics Laboratory, University of the West of England, UK
- (b) Department of Animal and Agriculture, Hartpury University, UK
- (c) School of Computing and Information Science, Anglia Ruskin University, UK
- (d) Department of Mathematics and Computer Science, Royal Military College of Canada (RMC), Canada
- (e) Department of Embedded Systems Engineering, Incheon National University, Republic of Korea

About the Author: Professor Gwanggil Jeon

Prof. Gwanggil Jeon received his B.S., M.S., and Ph.D. (summa cum laude) degrees from the Department of Electronics and Computer Engineering at Hanyang University, Seoul, Korea, in 2003, 2005, and 2008, respectively. From 2009 to 2011, he was a Post-doctoral Fellow at the School of Information Technology and Engineering, University of Ottawa, Ottawa, ON, Canada. From 2011 to 2012, he served as an Assistant Professor at the Graduate School of Science and Technology, Niigata University, Japan. From 2014 to 2015, and again from June to July 2015, he was a Visiting Scholar at the Centre de Mathématiques et Leurs Applications (CMLA), École Normale Supérieure Paris-Saclay (ENS-Cachan), France. Dr. Jeon is currently a full-time Professor at Incheon National University in Korea.